How long should I make my PySpark Developer resume?

For a PySpark Developer resume, aim for 1-2 pages. This length lets you showcase your relevant skills, experience, and projects without overwhelming recruiters. Focus on your most impactful PySpark projects, big data experience, and technical proficiencies. Use concise bullet points to highlight your achievements, and quantify results where possible, for example the data volumes your jobs processed or the runtime reductions you achieved. Quality trumps quantity, so prioritize information that relates directly to PySpark development and data engineering roles.

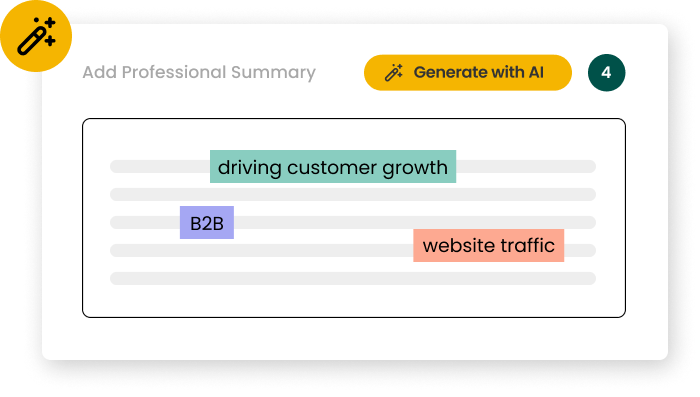

A hybrid format works best for PySpark Developer resumes, combining a skills-based summary with a chronological work history. This format lets you showcase your technical expertise in PySpark, Scala, and other big data technologies upfront, followed by your work experience. Key sections should include a technical skills summary, work experience, notable projects, and education. Use a clean, modern layout with consistent formatting. If you add visual cues such as icons for the programming languages or tools you're proficient in, keep them subtle and pair them with plain-text labels, since applicant tracking systems (ATS) may not be able to read graphics.

What certifications should I include on my PySpark Developer resume?

The key certification for PySpark Developers is the Databricks Certified Associate Developer for Apache Spark, which validates your expertise in Spark-based big data processing and distributed computing. Older credentials such as the Cloudera Certified Developer for Apache Hadoop (CCDH) and AWS Certified Big Data - Specialty have since been retired, so list them only if you already hold them; for a current cloud credential, consider AWS Certified Data Engineer - Associate. When listing certifications, include the year obtained and any expiration dates. Consider creating a dedicated "Certifications" section and placing it right after your skills summary so potential employers see your credentials immediately.

What are the most common mistakes to avoid on a PySpark Developer resume?

Common mistakes on PySpark Developer resumes include overemphasizing general programming skills without showcasing specific PySpark projects, neglecting to highlight experience with distributed computing and big data frameworks, and failing to quantify the impact of your work. To avoid these, focus on PySpark-specific achievements, detail your experience with tools like Hadoop and Kafka, and use metrics to demonstrate the scale and efficiency of your projects. Additionally, ensure your resume is ATS-friendly by using standard section headings and incorporating relevant keywords from the job description.